In machine learning, the model is only as good as the data you feed it. But when time is involved, "good data" isn't just about clean values—it's about structure. How much of the past does the model need to see? How far into the future are you trying to peer?

Understanding concepts like Lag Time, Prediction Horizon, and the distinction between variable types is what separates a basic regression from a powerful time series forecast. Let's break down these concepts using a practical interface.

1. The Architecture of Variables

Before we talk about time, we must talk about the actors in our play. Not all data columns serve the same purpose.

Endogenous vs. Exogenous

Endogenous (Target): This is the "What". It is the variable influenced by other variables in the system and the one you are trying to predict. In a stock price model, the stock price itself is endogenous.

Exogenous (Features): This is the "Why". These are external drivers: independent variables that influence the target but are not influenced by it (at least in the short term). For example, "Day of Week" or "Holiday" flags.
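Calendar flags are a convenient example of exogenous features: they can be computed for any date, including future ones, and the target cannot change them. The sketch below derives them in plain Python. The `calendar_features` helper and the `HOLIDAYS` set are hypothetical and only illustrate the idea.

```python
from datetime import date, timedelta

# Hypothetical holiday calendar for illustration; substitute your own dates.
HOLIDAYS = {date(2024, 1, 1), date(2024, 12, 25)}

def calendar_features(day):
    """Exogenous features that are known in advance for any date."""
    return {
        "day_of_week": day.weekday(),        # Monday=0 ... Sunday=6
        "is_holiday": int(day in HOLIDAYS),  # 1 if the date is in HOLIDAYS, else 0
    }

start = date(2023, 12, 30)
rows = [calendar_features(start + timedelta(days=i)) for i in range(4)]
for row in rows:
    print(row)
```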

Categorical Features

Machine learning models speak math, not English. Categories like "City" or "Product Type" must be translated into numbers through Encoding (e.g., One-Hot Encoding).
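To make One-Hot Encoding concrete, here is a minimal encoder in plain Python. It is a sketch, not how any particular platform implements it; in practice you would typically reach for scikit-learn's `OneHotEncoder` or pandas' `get_dummies`. The `one_hot` name and the city data are hypothetical.

```python
def one_hot(values):
    """Map each category to a 0/1 vector with one slot per distinct category."""
    categories = sorted(set(values))  # fixed, reproducible column order
    index = {cat: i for i, cat in enumerate(categories)}
    encoded = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1             # switch on the slot for this category
        encoded.append(row)
    return categories, encoded

cities = ["Paris", "Tokyo", "Paris", "Lima"]
columns, matrix = one_hot(cities)
print(columns)  # ['Lima', 'Paris', 'Tokyo']
print(matrix)   # [[0, 1, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0]]
```

Each category gets its own 0/1 column, so the model never reads a spurious order into the labels. In a real pipeline you would learn the category list from training data and decide how to handle categories that first appear at prediction time.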

Pro Tip: You can choose to apply preprocessing to just the target, just the features, or both. Often, you want to scale your inputs (exogenous) for stability, but keep your target (endogenous) in its original unit for interpretability.
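The Pro Tip can be sketched in a few lines: standardize an exogenous input using statistics learned from the training data, and pass the target through untouched. The data and the `fit_standardizer` helper are hypothetical; scikit-learn's `StandardScaler` is the usual off-the-shelf equivalent.

```python
from statistics import mean, pstdev

def fit_standardizer(train_values):
    """Learn mean and standard deviation from training data only."""
    mu, sigma = mean(train_values), pstdev(train_values)

    def transform(x):
        # Constant columns carry no signal; map them to 0 instead of dividing by zero.
        return (x - mu) / sigma if sigma else 0.0

    return transform

# Hypothetical training data: one exogenous feature and the target.
temperature = [10.0, 14.0, 10.0, 14.0]  # exogenous input: gets scaled
sales = [200.0, 220.0, 250.0, 270.0]    # endogenous target: left in original units

scale_temperature = fit_standardizer(temperature)
scaled_temperature = [scale_temperature(x) for x in temperature]
print(scaled_temperature)  # [-1.0, 1.0, -1.0, 1.0]
print(sales)               # unchanged, still in sales units
```

Fitting the scaler on the training window alone matters especially in time series: statistics computed over the full history would leak information from the future into the past.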

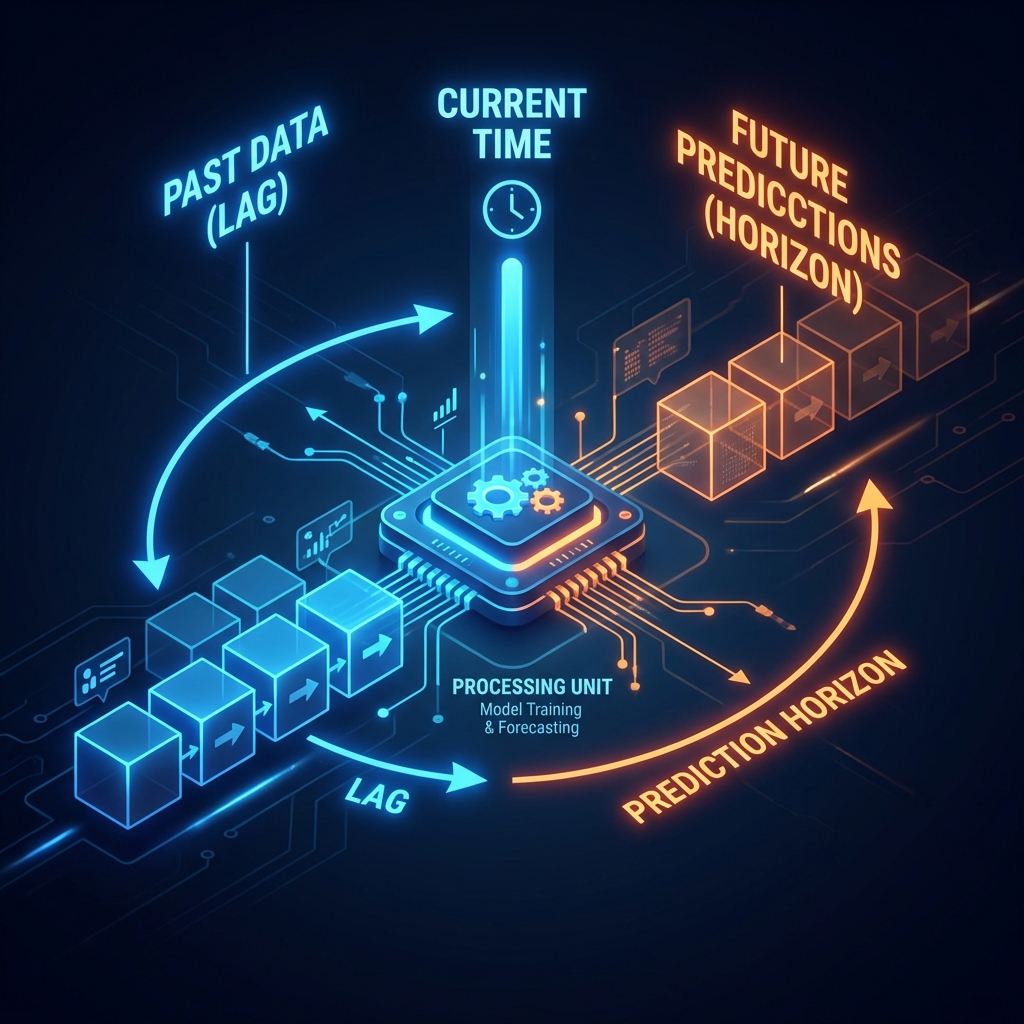

2. Time Series Mechanics: Lag & Horizon

This is where the magic happens. Time series forecasting isn't just predicting y from x. It's predicting y(t) using the series' own past values, such as y(t-1).

Lag Time: The Model's Memory

Lag Time defines how far back into the past the model looks to make a decision. If you have a Lag of 7 days, the model uses the last week's data to predict today.

If your Lag Time is set to 0, the model has no "memory" of previous steps. It treats every row as an isolated event. This essentially turns your problem into a standard regression (cross-sectional) task, stripping away the temporal context that defines time series forecasting.
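Here is a minimal sketch of what a lag setting does under the hood: it turns one series into a supervised table in which each target y(t) is paired with its previous `lag` values. The `make_lag_features` name and the sales numbers are hypothetical, and the code is not AIMU's implementation.

```python
def make_lag_features(series, lag):
    """Pair each y(t) with its previous `lag` values y(t-lag) ... y(t-1).

    With lag=0 every feature row is empty: the model sees no history.
    """
    X, y = [], []
    for t in range(lag, len(series)):
        X.append(series[t - lag:t])  # the model's "memory" window
        y.append(series[t])          # value to predict at time t
    return X, y

daily_sales = [5, 7, 6, 8, 9, 11]
X, y = make_lag_features(daily_sales, lag=3)
# X -> [[5, 7, 6], [7, 6, 8], [6, 8, 9]]
# y -> [8, 9, 11]

X0, y0 = make_lag_features(daily_sales, lag=0)
# Every row of X0 is [], the "no memory" case: each row is an isolated event.
```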

Prediction Horizon: The Scope

The Prediction Horizon is how many steps ahead into the future you want to forecast.

- Horizon = 0 or 1 (depending on whether the tool counts steps from zero): Short-term. Predicting the immediate next step (e.g., tomorrow's weather).

- Horizon = 30: Long-term. With daily data, this means predicting the trend for the next month. As the horizon increases, uncertainty naturally grows.
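Lag and horizon combine when the training pairs are built. The sketch below uses the direct strategy, where each input window is paired with the single value `horizon` steps after it; recursive forecasting, which feeds each prediction back in as an input, is a common alternative. The `make_windows` helper is hypothetical, and it counts the horizon from 1 (the next step), so a tool that counts from 0 would be offset by one.

```python
def make_windows(series, lag, horizon):
    """Build (input window, target) pairs for a direct forecast `horizon` steps ahead.

    The window holds the last `lag` observed values; the target is the value
    `horizon` steps after the window's final element (horizon=1 -> next step).
    """
    if lag < 1 or horizon < 1:
        raise ValueError("lag and horizon must both be >= 1")
    X, y = [], []
    for end in range(lag, len(series) - horizon + 1):
        X.append(series[end - lag:end])      # last `lag` observed values
        y.append(series[end - 1 + horizon])  # value `horizon` steps later
    return X, y

series = [1, 2, 3, 4, 5, 6]
X1, y1 = make_windows(series, lag=2, horizon=1)
X3, y3 = make_windows(series, lag=2, horizon=3)
# horizon=1: X1 = [[1, 2], [2, 3], [3, 4], [4, 5]], y1 = [3, 4, 5, 6]
# horizon=3: X3 = [[1, 2], [2, 3]],                 y3 = [5, 6]
```

A longer horizon leaves fewer training pairs and a wider gap between the last observed value and the target, which is one concrete reason uncertainty grows with the horizon.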

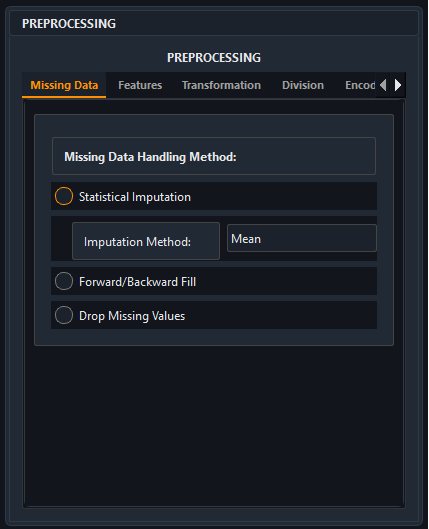

3. Configuring for Success: A Practical View

Handling these settings manually can be tedious. In modern platforms like AIMU, these complex engineering steps are reduced to intuitive sliders.

In the interface shown above (AIMU), notice how the workflow is streamlined:

- Categorical Selection: Clearly separate your target and feature processing.

- Time Config: A simple slider sets the Lag Time, instantly creating the windowed dataset needed for training.

- Horizon: Define your forecasting goal with a single input.

Conclusion

Feature engineering in time series is a balancing act. Too little lag, and you miss the trend. Too much, and you introduce noise. By clearly defining your Prediction Horizon and carefully selecting your Lags, you transform raw, chaotic data into a structured signal ready for high-performance modeling.

Ready to experiment?

Download the latest version of AIMU to access these advanced preprocessing tools and visualize their impact on your models in real-time.